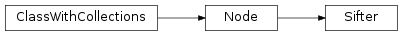

mvpa2.generators.base.Sifter


class mvpa2.generators.base.Sifter(includes, *args, **kwargs)

Exclude (do not generate) a provided dataset based on the values of its attributes.

Notes

Available conditional attributes:

- calling_time+: Time (in seconds) it took to call the node.
- raw_results: Computed results before invoking postproc. Stored only if postproc is not None.

(Conditional attributes enabled by default are suffixed with +.)

Examples

Typical use case: we need to generate all possible combinations of two chunks, but are interested only in those combinations where both targets are present.

>>> import numpy as np
>>> from mvpa2.datasets import Dataset
>>> from mvpa2.generators.partition import NFoldPartitioner
>>> from mvpa2.generators.base import Sifter
>>> from mvpa2.base.node import ChainNode
>>> ds = Dataset(samples=np.arange(8).reshape((4,2)),
...              sa={'chunks':  [ 0 ,  1 ,  2 ,  3 ],
...                  'targets': ['c', 'c', 'p', 'p']})

A plain ‘NFoldPartitioner(cvtype=2)’ would also provide partitions whose testing set contains only two ‘c’s or only two ‘p’s. We do not want to include those in our cross-validation, since they would break the balance between training and testing sets.
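To make the problem concrete, the following plain-Python sketch (no PyMVPA required) enumerates the testing sets that a leave-two-chunks-out scheme would produce for the four-sample dataset above and flags which of them contain both targets. The helper name `testing_sets` is hypothetical, and the sketch assumes, as in the example, exactly one sample per chunk.

```python
from itertools import combinations

# Toy copy of the example dataset: one sample per chunk.
chunks = [0, 1, 2, 3]
targets = ['c', 'c', 'p', 'p']


def testing_sets(chunks, targets, cvtype=2):
    """Yield (chunk_combination, targets_in_testing_set) for every
    combination of `cvtype` chunks (hypothetical helper, not PyMVPA API)."""
    for combo in combinations(sorted(set(chunks)), cvtype):
        tgts = [t for c, t in zip(chunks, targets) if c in combo]
        yield combo, tgts


for combo, tgts in testing_sets(chunks, targets):
    has_both = set(tgts) == {'c', 'p'}
    print(combo, tgts, 'keep' if has_both else 'drop')
```

Of the six combinations, only (0, 1) and (2, 3) are dropped, because each of them puts a single target into the testing set.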

>>> par = ChainNode([NFoldPartitioner(cvtype=2, attr='chunks'),
...                  Sifter([('partitions', 2),
...                          ('targets', ['c', 'p'])])
...                  ], space='partitions')

We have to provide an appropriate ‘space’ parameter to the ‘ChainNode’ so that any subsequent splitting, e.g. by a ‘TransferMeasure’, can operate along that attribute. Here we simply match the default space of NFoldPartitioner, ‘partitions’.

>>> print par
<ChainNode: <NFoldPartitioner>-<Sifter: partitions=2, targets=['c', 'p']>>
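Conceptually, the chain feeds every dataset generated by the first node into the next node's generator. The sketch below models that with plain Python generators over a dict of attribute lists; `partitioner`, `sifter` and `chain_generate` are hypothetical stand-ins, not PyMVPA classes. The partition labels (2 for the testing samples, 1 for all others) mirror the values used in this example, and the `sifter` stand-in hard-codes the two rules of the ‘Sifter’ above rather than interpreting a rule list.

```python
from itertools import combinations


def partitioner(ds, attr='chunks', cvtype=2):
    """Yield copies of `ds` with a 'partitions' list: 2 marks samples whose
    `attr` value is in the current combination (testing), 1 all others."""
    for combo in combinations(sorted(set(ds[attr])), cvtype):
        out = dict(ds)
        out['partitions'] = [2 if v in combo else 1 for v in ds[attr]]
        yield out


def sifter(ds, attr='targets', uvalues=('c', 'p')):
    """Yield `ds` only if its testing samples contain all `uvalues`."""
    present = {t for t, p in zip(ds[attr], ds['partitions']) if p == 2}
    if set(uvalues) <= present:
        yield ds


def chain_generate(ds, *nodes):
    """Pass every dataset produced by one node through the next node."""
    if not nodes:
        yield ds
        return
    for out in nodes[0](ds):
        yield from chain_generate(out, *nodes[1:])


ds = {'chunks': [0, 1, 2, 3], 'targets': ['c', 'c', 'p', 'p']}
for out in chain_generate(ds, partitioner, sifter):
    print(out['partitions'])
```

The partitioner alone yields six labelings; after the sifter only the four in which chunks {0, 2}, {0, 3}, {1, 2} or {1, 3} form the testing set remain.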

Additionally, e.g. for cases with cvtype > 2, if balance must be guaranteed (and all other generated partitions discarded), the specification can carry a dict with ‘uvalues’ and ‘balanced’ keys, e.g.:

>>> par = ChainNode([NFoldPartitioner(cvtype=2, attr='chunks'),
...                  Sifter([('partitions', 2),
...                          ('targets', dict(uvalues=['c', 'p'],
...                                           balanced=True))])
...                  ], space='partitions')

N.B. In this example it is equivalent to the previous definition, since balance is already guaranteed with cvtype=2 and two requested unique values.

>>> for ds_ in par.generate(ds):
...     testing = ds[ds_.sa.partitions == 2]
...     print list(zip(testing.sa.chunks, testing.sa.targets))
[(0, 'c'), (2, 'p')]
[(0, 'c'), (3, 'p')]
[(1, 'c'), (2, 'p')]
[(1, 'c'), (3, 'p')]
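With cvtype > 2 the two requirements can diverge. The sketch below assumes that ‘balanced=True’ means each requested unique value must occur equally often among the selected samples; that reading, and the helper name `passes`, are assumptions for illustration rather than PyMVPA's implementation. It uses six chunks (one sample each, three ‘c’ and three ‘p’) and testing sets of four chunks.

```python
from collections import Counter
from itertools import combinations


def passes(values, uvalues, balanced=False):
    """Return True if every value in `uvalues` occurs in `values` and,
    when `balanced` is True, all of them occur equally often.
    (Hypothetical helper illustrating one reading of the dict spec.)"""
    counts = Counter(v for v in values if v in uvalues)
    if set(counts) != set(uvalues):
        return False
    return not balanced or len(set(counts.values())) == 1


# Six chunks, one sample each; testing sets of four chunks (cvtype=4).
targets = ['c', 'c', 'c', 'p', 'p', 'p']
tests = [[targets[i] for i in combo] for combo in combinations(range(6), 4)]

present = sum(passes(t, ['c', 'p']) for t in tests)
balanced = sum(passes(t, ['c', 'p'], balanced=True) for t in tests)
print(len(tests), present, balanced)  # 15 15 9
```

All 15 testing sets contain both targets, but only the 9 with two ‘c’s and two ‘p’s are balanced, so under this reading the dict form would discard the other 6.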

Attributes

- descr: Description of the object, if any
- pass_attr: Which attributes of the dataset or self.ca to pass into the result dataset upon call
- postproc: Node to perform post-processing of results
- space: Processing space name of this node

Methods

- __call__(ds[, _call_kwargs]): The default implementation calls _precall(), _call(), and finally returns the output of _postcall().
- generate(ds): Validate the obtained dataset and yield it if it matches
- get_postproc(): Returns the post-processing node or None.
- get_space(): Query the processing space name of this node.
- reset()
- set_postproc(node): Assigns a post-processing node
- set_space(name): Set the processing space name of this node.

Parameters:

includes : list

List of rules, given as (attribute, uvalues) tuples, where all listed ‘uvalues’ must be present in the dataset. Matching samples or features are selected to proceed to the next rule in the list. If at some point not all listed values of the attribute are present, the dataset does not pass through the ‘Sifter’. uvalues might also be a dict (see the example above).

enable_ca : None or list of str

Names of the conditional attributes which should be enabled in addition to the default ones

disable_ca : None or list of str

Names of the conditional attributes which should be disabled

space : str, optional

Name of the ‘processing space’. The actual meaning of this argument heavily depends on the sub-class implementation. In general, this is a trigger that tells the node to compute and store information about the input data that is “interesting” in the context of the corresponding processing in the output dataset.

pass_attr : str, list of str|tuple, optional

Additional attributes to pass on to an output dataset. Attributes can be taken from all three attribute collections of an input dataset (sa, fa, a; see Dataset.get_attr()), or from the collection of conditional attributes (ca) of a node instance. Corresponding collection name prefixes should be used to identify attributes, e.g. ‘ca.null_prob’ for the conditional attribute ‘null_prob’, or ‘fa.stats’ for the feature attribute ‘stats’. In addition to a plain attribute identifier it is possible to use a tuple to trigger more complex operations. The first tuple element is the attribute identifier, as described before. The second element is the name of the target attribute collection (sa, fa, or a). The third element is the axis number of a multidimensional array that shall be swapped with the current first axis. The fourth element is a new name that shall be used for an attribute in the output dataset. Example: (‘ca.null_prob’, ‘fa’, 1, ‘pvalues’) will take the conditional attribute ‘null_prob’ and store it as a feature attribute ‘pvalues’, while swapping the first and second axes. Simplified instructions can be given by leaving out consecutive tuple elements starting from the end.

postproc : Node instance, optional

Node to perform post-processing of results. This node is applied in __call__() to perform a final processing step on the to-be-returned result dataset. If None, nothing is done.

descr : str

Description of the instance
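The interplay of ‘postproc’ with the ‘calling_time’ and ‘raw_results’ conditional attributes listed in the Notes can be pictured with the following sketch. ‘ToyNode’ is a hypothetical stand-in, not PyMVPA's ‘Node’, and it only mimics the documented behaviour: the post-processing node is applied as a final step of the call, the raw result is kept only when a post-processing node is set, and the call duration is recorded in seconds. Whether the measured time includes post-processing is a choice made for this sketch, not a statement about the library.

```python
import time


class ToyNode:
    """Hypothetical stand-in illustrating the documented __call__ flow."""

    def __init__(self, func, postproc=None):
        self._func = func          # the node's own processing step
        self.postproc = postproc   # optional callable applied afterwards
        self.ca = {}               # stand-in for conditional attributes

    def __call__(self, ds):
        start = time.perf_counter()
        result = self._func(ds)
        if self.postproc is not None:
            # raw_results is stored only if postproc is not None
            self.ca['raw_results'] = result
            result = self.postproc(result)
        self.ca['calling_time'] = time.perf_counter() - start
        return result


node = ToyNode(lambda xs: [x * 2 for x in xs], postproc=sum)
print(node([1, 2, 3]))           # 12
print(node.ca['raw_results'])    # [2, 4, 6]
```

A node created without ‘postproc’ returns the result of its own step unchanged and never sets ‘raw_results’.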


generate(ds)

Validate the obtained dataset and yield it if it matches.
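The rule semantics described for ‘includes’ can be modelled in a few lines. The sketch below is a hypothetical re-implementation (the name `sift_generate` is made up) over a dict of equally long per-sample attribute lists rather than a PyMVPA dataset. It follows the documented behaviour: rules are applied in order, each rule requires all of its ‘uvalues’ among the currently selected samples and then narrows the selection to the matching ones. For a dict spec it additionally assumes that ‘balanced=True’ requires equal counts, which is an interpretation, not confirmed library behaviour.

```python
from collections import Counter


def sift_generate(ds, includes):
    """Yield `ds` unchanged if it satisfies every (attr, uvalues) rule.

    `ds` maps attribute names to lists of per-sample values.  Rules are
    applied in order; each one narrows the set of selected samples.
    """
    selected = list(range(len(next(iter(ds.values())))))
    for attr, spec in includes:
        balanced = False
        if isinstance(spec, dict):
            balanced = spec.get('balanced', False)
            spec = spec['uvalues']
        # a scalar such as ('partitions', 2) is treated as a single value
        uvalues = spec if isinstance(spec, (list, tuple, set)) else [spec]
        values = [ds[attr][i] for i in selected]
        counts = Counter(v for v in values if v in uvalues)
        if set(counts) != set(uvalues):
            return  # some required value is missing: do not yield
        if balanced and len(set(counts.values())) > 1:
            return  # values present but not equally frequent
        # keep only the samples matching this rule for the next rule
        selected = [i for i in selected if ds[attr][i] in uvalues]
    yield ds


rules = [('partitions', 2), ('targets', ['c', 'p'])]
ok = {'partitions': [2, 1, 2, 1], 'targets': ['c', 'c', 'p', 'p']}
bad = {'partitions': [2, 2, 1, 1], 'targets': ['c', 'c', 'p', 'p']}
print(len(list(sift_generate(ok, rules))), len(list(sift_generate(bad, rules))))
# 1 0
```

Writing the check as a generator that yields zero or one dataset lets it slot directly after another generator in a chain, which is how the ‘Sifter’ is used in the examples above.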